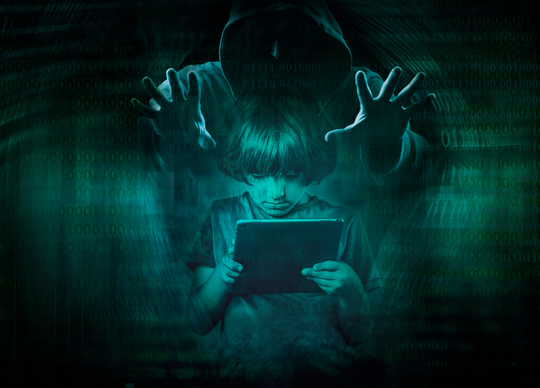

Explicit and disturbing content is being “served up” to children online and the kids themselves are calling on adults in government and tech companies to step in to protect them, according to a new international report.

Experts are calling for a clear duty of care after research conducted with some of society’s most vulnerable children and youth found more than 70 per cent have seen content online that they found concerning, including violent and explicit content.

“Adults and the law are always 10 steps behind. Kids are telling us that they aren’t all right online. Yet companies lack transparency and accountability,” said report author Dr Faith Gordon, from the ANU College of Law.

“Alarmingly, we found children as young as two or three years old have been exposed to really violent and sexually explicit content. Often, young people have to navigate distressing pushed content. Children also talked about experiencing unwanted contact, often from adults posing as children or being bombarded with scams.”

The report found only 40 per cent of children who experienced online harm reported it to the platforms they were using. Young people who do complain typically report feeling “re-victimised” because they receive no response, or only automated replies, and see no action from the platforms.

“Young people questioned why companies do not appear to be held to account. They want tech companies and the government to urgently address these issues and they want their voices and suggestions to be heard,” Dr Gordon said.

“There needs to be a clear duty of care and companies need to be much more transparent. This needs to be coupled with a legislative framework, which upholds and promotes the rights of children.”

The qualitative research, conducted in the UK during COVID-19 lockdowns, calls for children’s and young people’s voices to be at the centre of discussions on all policy reforms in this area.

Dr Gordon said there has never been a more crucial time to regulate online spaces and educate wider society about acceptable online behaviour, as the pandemic has made it necessary for children to be on the internet.

“Children told us they want to be safe online and that’s up to governments, policymakers and the tech companies to genuinely listen and address their concerns in partnership with them,” she said.

“Children’s rights need to be considered in making reforms and that is what is missing in the frameworks that currently exist in Australia.

“Countries, such as Australia and the UK, who signed up to the UN Convention on the Rights of the Child in 1990 are obligated to ensure that it is embedded into any frameworks moving forward. The latest UN General Comment on children’s rights in the digital environment needs to be implemented on the ground.”

Dr Gordon said although parts of Australia’s e-safety model are leading the way internationally, there are “grey areas” around legal but harmful content online and how rights-based approaches work in practice.

“In Australia the spotlight is now on new privacy legislation. Proposals will require social media companies, under law, to act in the best interests of children when accessing their data.

“This is a positive move, but as the study shows we need to see other agencies being held to account on this too, including the education tech sector and gaming platforms.

“We know from this report that children and young people can get around verification processes. There needs to be a more rigorous process for their age group, as well as for adults who should also be verifying their age.”